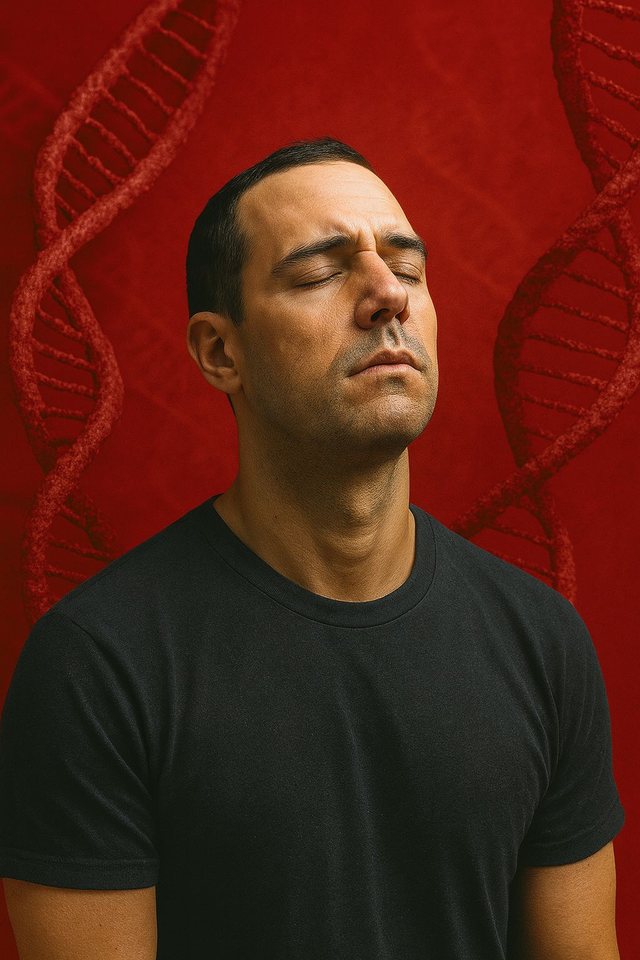

Evolution has given humanity five basic senses, but a new mathematical study suggests that memory works best in a world with seven. Researchers from Skoltech, working with colleagues in the United Kingdom and Russia, have modeled how memories are formed as “engrams” – sparse networks of neurons that fire together in different areas of the brain. In this model, each concept exists in a multidimensional “conceptual space,” defined by features associated with the senses.

The team showed that when engrams are repeatedly reactivated by sensory input, they evolve into a stable state. At this point, the capacity of memory (that is, how many different concepts can be kept distinct) depends on the number of features used to encode them. The most surprising result was that capacity peaks when concepts are represented by seven features, not five or eight. In other words, a seven-dimensional conceptual space maximizes memory’s ability to keep engrams separate.

This conclusion, published in Scientific Reports, held regardless of the details of the model, suggesting that the “seven” comes from the mathematics of interactions between engrams rather than from any particular biological assumption. The researchers emphasize that this does not mean that humans have two hidden senses. Although mechanisms such as proprioception (the sense of body position) or gut signals are mentioned, the study deals only with abstract independent channels that, in theory, help partition memories.

The practical significance is clear: if seven independent inputs maximize memory capacity, then neuromorphic chips, robots, and artificial intelligence systems could be designed to process seven separate data sources, increasing conceptual resolution without increasing the cognitive space they use.